|

|

|

#1 |

|

Natteravn

Join Date: Nov 2009

Location: Herford / Germany

Posts: 2,496

|

Rounding differences in mathieee and 68k FPUs

The double precision expression of

(37.0 * 100.0) / 34.0yields three different results, depending whether it is calculated by mathieeedoubbas.library, an 68k FPU or a PPC FPU. The rounding mode should always be "round to nearest", as required by ISO-C. Here is a small test program: Code:

void

fpEq (double x, double y)

{

if (x != y)

exit (1);

}

void

fpTest (double x, double y)

{

double result1 = (35.7 * 100.0) / 45.0;

double result2 = (x * 100.0) / y;

fpEq (result1, result2);

}

main ()

{

fpTest (35.7, 45.0);

exit (0);

}

result1in this test is usually calculated by the compiler, and depends on whether the compiler itself uses an FPU, or is a cross-compiler. My oberservation is that result2is evaluated as $4053d55555555554 when using the mathieeedoubbas.library for IEEEDPMuland IEEEDPDiv. A 68k FPU (tested with 68060) evaluates it as $4053d55555555555, using fmul.dand fdiv.d. While a PPC- or x86-based cross-compiler thinks result1must be $4053d55555555556! The test fails. What is correct?  I can confirm that FPCRwas 0 while running the test with 68060 FPU, so it should have been in round-to-nearest mode, as required by ISO-C. But perhaps the rounding mode has nothing to do with it, and this is just a matter of precision, which is higher in the 68k FPU than in the PPC or x86 FPU? Anyway, the mathieeedoubbas result looks most incorrect to me. Is that a bug (OS3.9, didn't check OS3.2 yet)? What rounding mode does mathieeedoubbas use? Can you even change it? EDIT: Using John R. Hauser's portable IEC/IEEE Softfloat library yields $4053d55555555556. Whatever that means... Last edited by phx; 21 July 2021 at 15:02. Reason: Softloat lib result |

|

|

|

|

#2 |

|

Moderator

Join Date: Dec 2010

Location: Wisconsin USA

Age: 60

Posts: 839

|

Why should the results always be rounded to ISO-C specifications? The Amiga RKRM was written primarily for SAS-C, but it also provided a few examples for assembler code.

IIRC the C= mathlibs didn't offer rounding options (for performance reasons). Also, for the OS3.9 mathlibs, H&P wanted to get rid of 68000/68010 + 68881/68882 hardware (hack) support but not add support for any rounding options. I don't know about OS3.1.4 or OS3.2. But you could check the NDK for these versions. For the hardware 68K FPU systems, rounding options are available but not always from the mathlibs (e.g. you roll your own FPU code). Last edited by SpeedGeek; 22 July 2021 at 14:41. |

|

|

|

|

#3 |

|

Zone Friend

Join Date: Mar 2004

Location: Middle Earth

Age: 40

Posts: 2,127

|

A bit off-topic: Isn't that why some software states in their licence/Terms of Service that you can't use the software in mission critical applications because of examples like this? Good spotting any way.

|

|

|

|

|

#4 | ||||

|

Natteravn

Join Date: Nov 2009

Location: Herford / Germany

Posts: 2,496

|

Quote:

Quote:

The mathieeedoubbas result looks really strange. It might be a bug, bad precision or different rounding mode. Quote:

Quote:

|

||||

|

|

|

|

#5 |

|

Registered User

Join Date: Jul 2015

Location: The Netherlands

Posts: 3,406

|

That is interesting for sure! I'd have expected all three to give the same result.

|

|

|

|

|

#6 | ||

|

Registered User

Join Date: Jan 2019

Location: Germany

Posts: 3,214

|

Quote:

Anyhow Let me clarify this a bit: If the mathieeedoubbas.library is run on the FPU, and is opened correctly - that is, by the same task(!) that runs the calculations, it configures the FPU as of 3.1.4 and below in "round to zero", double precision. This is *not* a particularly good choice, but it is consistent with the choice the library makes if run on the CPU. If the library is run on the CPU (no FPU available) and the version is that of 3.1.4 or below, it is implementing "round to zero", double precision. This is fast, but lazy. Now, on 3.2, things changed (and this was a major task). Now, if the library is run on FPU, it implements "IEEE aware round to nearest", and the same(!) also goes for the CPU implementation. That is rounding mode is again consistent, but the considerably more useful "round to nearest" mode is used. All again under the constraint "used correctly", i.e. you open the library from the right task, and don't touch the FPCR afterwards. Quote:

This library is also quite fine, and a good source. The mathieeedoubbas was verified with the "Paranoia test". |

||

|

|

|

|

#7 | ||||

|

Natteravn

Join Date: Nov 2009

Location: Herford / Germany

Posts: 2,496

|

Quote:

fesetround()since C99, but I'm refering to the default behaviour on startup which is defined in section F.7.3 Execution: Code:

At program startup the floating point environment is initialized as prescribed by IEC 559: (...) - The rounding direction mode is rounding to nearest. Quote:

EDIT: BTW, does it mean that the rounding mode in FPCR for an FPU could be misconfigured by the MathIEEE library? At which point? When opening the library? As far as I remember FPCR is zero (round to nearest) when a program uses the FPU without MathIEEE libraries. Quote:

Quote:

Last edited by phx; 23 July 2021 at 15:15. Reason: FPCR |

||||

|

|

|

|

#8 | ||

|

Registered User

Join Date: Jan 2019

Location: Germany

Posts: 3,214

|

Quote:

Quote:

If you use the FPU without the math libraries, then the FPU remains unitialized. A NULL FPU state transitions on the first FPU usage to a state where the FPCR is 0, hence extended precision, round to nearest. Opening the math libraries changes that to round to nearest, double precision. While round to zero, extended precision - round to zero, double precision is identical to a single round to zero step (hence, the FPU precision does not matter), it does matter for IEEE aware round-to-nearest (the two rounding steps one after another could yield a result different from one rounding step), and it is hence crucial that the FPU precision is setup correctly. This implies that you cannot use mathieeesingbas and mathieeedoubbas from the same task (or, only if you re-open the library). Are you sure? Hauser's SoftCPU should be rather high quality. IEEE aware round-to-nearest is unfortunately non-trivial due to its additional bits (round, guard, sticky-bit) you need to keep care of. |

||

|

|

|

|

#9 | ||||||

|

Natteravn

Join Date: Nov 2009

Location: Herford / Germany

Posts: 2,496

|

Quote:

Quote:

Quote:

Does the OS do any FPU initialization, when a new task is launched? Quote:

Quote:

Which means I cannot use mathieeesingbas for floatand mathieeedoubbas for doublein the same task? This is quite common, I would say. And I always expected that to work. Quote:

Code:

Lest this program stop prematurely, i.e. before displaying

`END OF TEST',

try to persuade the computer NOT to terminate execution when an

error like Over/Underflow or Division by Zero occurs, but rather

to persevere with a surrogate value after, perhaps, displaying some

warning. If persuasion avails naught, don't despair but run this

program anyway to see how many milestones it passes, and then

amend it to make further progress.

Answer questions with Y, y, N or n (unless otherwise indicated).

Diagnosis resumes after milestone Number 0 Page: 1

Users are invited to help debug and augment this program so it will

cope with unanticipated and newly uncovered arithmetic pathologies.

Please send suggestions and interesting results to

Richard Karpinski

Computer Center U-76

University of California

San Francisco, CA 94143-0704, USA

In doing so, please include the following information:

Precision: double;

Version: 10 February 1989;

Computer:

Compiler:

Optimization level:

Other relevant compiler options:

Diagnosis resumes after milestone Number 1 Page: 2

Running this program should reveal these characteristics:

Radix = 1, 2, 4, 8, 10, 16, 100, 256 ...

Precision = number of significant digits carried.

U2 = Radix/Radix^Precision = One Ulp

(OneUlpnit in the Last Place) of 1.000xxx .

U1 = 1/Radix^Precision = One Ulp of numbers a little less than 1.0 .

Adequacy of guard digits for Mult., Div. and Subt.

Whether arithmetic is chopped, correctly rounded, or something else

for Mult., Div., Add/Subt. and Sqrt.

Whether a Sticky Bit used correctly for rounding.

UnderflowThreshold = an underflow threshold.

E0 and PseudoZero tell whether underflow is abrupt, gradual, or fuzzy.

V = an overflow threshold, roughly.

V0 tells, roughly, whether Infinity is represented.

Comparisions are checked for consistency with subtraction

and for contamination with pseudo-zeros.

Sqrt is tested. Y^X is not tested.

Extra-precise subexpressions are revealed but NOT YET tested.

Decimal-Binary conversion is NOT YET tested for accuracy.

Diagnosis resumes after milestone Number 2 Page: 3

The program attempts to discriminate among

FLAWs, like lack of a sticky bit,

Serious DEFECTs, like lack of a guard digit, and

FAILUREs, like 2+2 == 5 .

Failures may confound subsequent diagnoses.

The diagnostic capabilities of this program go beyond an earlier

program called `MACHAR', which can be found at the end of the

book `Software Manual for the Elementary Functions' (1980) by

W. J. Cody and W. Waite. Although both programs try to discover

the Radix, Precision and range (over/underflow thresholds)

of the arithmetic, this program tries to cope with a wider variety

of pathologies, and to say how well the arithmetic is implemented.

The program is based upon a conventional radix representation for

floating-point numbers, but also allows logarithmic encoding

as used by certain early WANG machines.

BASIC version of this program (C) 1983 by Prof. W. M. Kahan;

see source comments for more history.

Diagnosis resumes after milestone Number 3 Page: 4

Program is now RUNNING tests on small integers:

Comparison alleges that -0.0 is Non-zero!

Since comparison denies Z = 0, evaluating (Z + Z) / Z should be safe.

What the machine gets for (Z + Z) / Z is NaN .

This is a DEFECT!

Diagnosis resumes after milestone Number 7 Page: 5

Searching for Radix and Precision.

Radix = 2.000000 .

Closest relative separation found is U1 = 1.1102230e-16 .

Recalculating radix and precision

confirms closest relative separation U1 .

Radix confirmed.

The number of significant digits of the Radix is 53.000000 .

Diagnosis resumes after milestone Number 30 Page: 6

Subtraction appears to be normalized, as it should be.

Checking for guard digit in *, /, and -.

*, /, and - appear to have guard digits, as they should.

Diagnosis resumes after milestone Number 40 Page: 7

Checking rounding on multiply, divide and add/subtract.

Multiplication appears to round correctly.

Division appears to round correctly.

Addition/Subtraction appears to round correctly.

Checking for sticky bit.

Sticky bit apparently used correctly.

Does Multiplication commute? Testing on 20 random pairs.

No failures found in 20 integer pairs.

Running test of square root(x).

Testing if sqrt(X * X) == X for 20 Integers X.

Test for sqrt monotonicity.

sqrt has passed a test for Monotonicity.

Testing whether sqrt is rounded or chopped.

Square root appears to be correctly rounded.

Diagnosis resumes after milestone Number 90 Page: 8

Testing powers Z^i for small Integers Z and i.

DEFECT: computing

(-0.00000000000000000e+00) ^ (3.00000000000000000e+00)

yielded -inf;

which compared unequal to correct -0.00000000000000000e+00 ;

they differ by -inf .

Similar discrepancies have occurred 2 times.

... no discrepancis found.

Seeking Underflow thresholds UfThold and E0.

Smallest strictly positive number found is E0 = 0e-325 .

Since comparison denies Z = 0, evaluating (Z + Z) / Z should be safe.

What the machine gets for (Z + Z) / Z is 2.00000000000000000e+00 .

This is O.K., provided Over/Underflow has NOT just been signaled.

Underflow is gradual; it incurs Absolute Error =

(roundoff in UfThold) < E0.

The Underflow threshold is 2.22507385850720000e-308, below which

calculation may suffer larger Relative error than merely roundoff.

Since underflow occurs below the threshold

UfThold = (2.00000000000000000e+00) ^ (-1.02200000000000000e+03)

only underflow should afflict the expression

(2.00000000000000000e+00) ^ (-1.02200000000000000e+03);

actually calculating yields: 0.00000000000000000e+00 .

This computed value is O.K.

Testing X^((X + 1) / (X - 1)) vs. exp(2) = 7.38905609893065000e+00 as X -> 1.

Accuracy seems adequate.

Testing powers Z^Q at four nearly extreme values.

... no discrepancies found.

Diagnosis resumes after milestone Number 160 Page: 9

Searching for Overflow threshold:

This may generate an error.

Can `Z = -Y' overflow?

Trying it on Y = -inf .

Seems O.K.

Overflow threshold is V = 1.79769313486231000e+308 .

Overflow saturates at V0 = +inf .

No Overflow should be signaled for V * 1 = 1.79769313486231000e+308

nor for V / 1 = 1.79769313486231000e+308 .

Any overflow signal separating this * from the one

above is a DEFECT.

Diagnosis resumes after milestone Number 190 Page: 10

What message and/or values does Division by Zero produce?

Trying to compute 1 / 0 produces ... +inf .

Trying to compute 0 / 0 produces ... NaN .

Diagnosis resumes after milestone Number 220 Page: 11

The number of FAILUREs encountered = 1.

The number of DEFECTs discovered = 2.

The arithmetic diagnosed has unacceptable Serious Defects.

Potentially fatal FAILURE may have spoiled this program's subsequent diagnoses.

END OF TEST.

stack: 0 bytes

|

||||||

|

|

|

|

#10 | |||||

|

Registered User

Join Date: Jan 2019

Location: Germany

Posts: 3,214

|

Quote:

Quote:

Quote:

Beware, however, that the shell does not create a new task for each program launched. Executables just overlay the shell, and thus "borrow" or "replace" the FPU state the last shell program left behind. IOWs, to use the FPU reliably, you either have to initialize it yourself, or you need to open the math libraries. Round to zero for "up to 3.1.4", round to nearest from 3.2 on. Quote:

3.1.4 configured the FPU to "round to zero, double precision" in both libraries, and this works due to the above property of rouding to zero (provable). Unfortunately, "round to zero" is not a good choice for numerics, so that was in a sense broken, too. The problem is that the math libraries, on the 68030 and 68020 at least, have to use the extended precision opcodes (68040 onwards have "single precision" and "double precision" opcodes). The only other option would be to set the FPCR on each call to the right precision. I can already hear the speed geeks cry. SAS/C got around it by using FFP for float, and otherwise "float = double" which is permissible by ANSI/C, but you really don't want to use mathffp. It's a rotten format, has no gradual underflow, and no INFs or NANs. Really bad for numerics. The way how I got around it in DMandel is to save the FPCR after opening each library, and switch it manually before calling into the single or double precision, but that's also a bad hack. Concerning ANSI/C, isn't it so that computing (float) (op) (float) should be implemented as (round to float) ((double) (op) (double)), that is, your code should rather perform the computation in double precision, and then convert the result to single precision upon storing it in the variable? Quote:

I always had the plan to also provide a mathieeeextbas and mathieeeexttrans library, and extend the API to also include conversion to and from ASCII, but I afraid this will have to wait. |

|||||

|

|

|

|

#11 | |||||

|

Natteravn

Join Date: Nov 2009

Location: Herford / Germany

Posts: 2,496

|

Quote:

Quote:

Additionally I see the problem that you get different results with a MathIEEE program, depending on the version of the library (OS3.2 or not). For example the AmigaOS native version of vbcc is linked with -lmieee, so it might calculate constants differently on compile time under 3.2 than on run time under a different version. But then it just adds to the initial problem of this thread, that also FPUs of cross-compilers seem to round differently... Quote:

Quote:

Quote:

|

|||||

|

|

|

|

#12 | |

|

Registered User

Join Date: Jan 2019

Location: Germany

Posts: 3,214

|

Quote:

The latter is not exactly correct, and may in some corner cases result in incorrect rounding, but it is quite exceptional. For the 68040 and onwards, this problem does not exist. The math libraries then use the dedicated single/double precision instructions that intrinsically round to the right precision. Even in that case opening singbas first and doubbas later will work, but will always give the right (IEEE aware rounded) results. Anyhow, there is really no good solution. Either round to zero all the way, or switch the precision on each call, or accept some mis-rounded results with the 68881/882. Currently, my choice is the latter. |

|

|

|

|

|

#13 | |

|

old bearded fool

Join Date: Jan 2010

Location: Bangkok

Age: 56

Posts: 775

|

Quote:

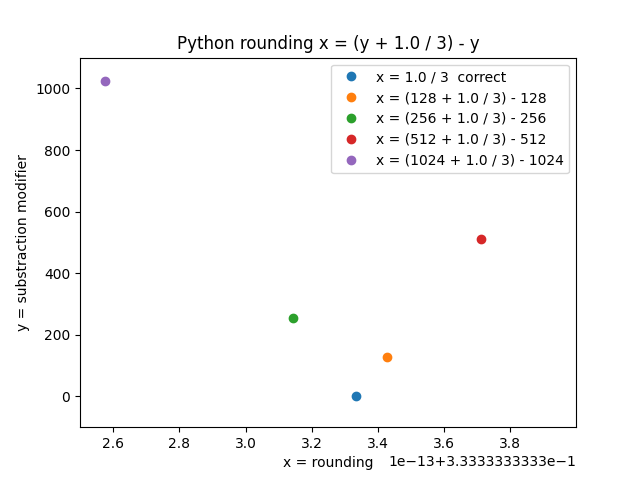

Code:

x = (100 + 1.0 / 3) - 100 y = 1.0 / 3 x == y False x = 0.3333333333333286 y = 0.3333333333333333  BTW: What happened to "Posits" (Type III Unum)? I was looking forward to the direct drop-in replacement for IEEE Standard 754 floats, better idea and easier (less silicon die area needed) to implement in hardware. Stanford Seminar: Beyond Floating Point: Next Generation Computer Arithmetic [ Show youtube player ] https://en.wikipedia.org/wiki/Unum_(number_format) Last edited by modrobert; 18 February 2024 at 17:19. |

|

|

|

|

|

#14 | ||

|

Registered User

Join Date: Jan 2019

Location: Germany

Posts: 3,214

|

Quote:

Quote:

In particular, floating point operations are typically not associative (i.e. a op (b op c) != (a op b) op c), and not distributive (a*(b+c) != a*b +a*c). Please read: https://www.itu.dk/~sestoft/bachelor...54_article.pdf |

||

|

|

|

|

#15 | ||

|

old bearded fool

Join Date: Jan 2010

Location: Bangkok

Age: 56

Posts: 775

|

Quote:

Quote:

Last edited by modrobert; 26 July 2021 at 06:49. |

||

|

|

|

|

#16 | |

|

Registered User

Join Date: Jan 2019

Location: Germany

Posts: 3,214

|

Quote:

You find a good discussion of all this in Donald Knuth's Vol II of "The Art of Programming". Due to the rather universal spread of IEEE numbers, they are the first choice for numerics - unless you have special needs. Because it is still relevant, and the caveats mentioned there still apply as of today. These are consequences of pretty much all "limited precision" number systems. The error has to go somewhere.... |

|

|

|

| Currently Active Users Viewing This Thread: 1 (0 members and 1 guests) | |

| Thread Tools | |

Similar Threads

Similar Threads

|

||||

| Thread | Thread Starter | Forum | Replies | Last Post |

| Fixed point maths, quaternions, rounding errors and normalisation. | deimos | Coders. General | 28 | 11 November 2019 13:39 |

| What are the differences between this and WinUAE? | Foebane | support.FS-UAE | 21 | 02 April 2018 21:06 |

| FPU rounding issues | phx | support.WinUAE | 6 | 12 November 2014 10:03 |

| CPUs and FPUs, get in quick! Some real bargains to be had! | amigamaniac | MarketPlace | 8 | 14 September 2009 03:49 |

| Differences between 3.5 and 3.9 | redneon | Amiga scene | 1 | 17 February 2005 12:19 |

|

|