#61

Registered User

Join Date: Apr 2013

Location: paris

Posts: 133


Hi! I haven't read this thread for a while; there are a lot of interesting things here!

Ross, so you have a modified zx0 packer that can beat arjm7 on AmigAtari data files?

#62

Defendit numerus

Join Date: Mar 2017

Location: Crossing the Rubicon

Age: 53

Posts: 4,468


Quote:

However, you must remove the LZ4 support and always replace the pre-compressed files with ZX0 ones. I don't think anyone would notice the speed change for pre-packed data. But beware that support for unpack-when-loading still has to be invented and implemented from scratch. To tell the truth, in the past few days I have not looked at the ZX0 code (too many other things), and I would like to try ZX5 (which I think can be ported to 68k very easily). I would also like to understand whether some of a/b's ideas can be adapted.

#63

Defendit numerus

Join Date: Mar 2017

Location: Crossing the Rubicon

Age: 53

Posts: 4,468


First release using 68k ZX0 on Amiga:

http://eab.abime.net/showthread.php?t=108712

It's implemented like 'stacker', with game cylinders packed stand-alone in a big indexed virtual disk. The other single files are also compressed with ZX0 (apart from the initial module, which is ADPCM encoded, with the decoding done during the beginning of the intro, but that doesn't count).

Actually, in the end I had so much space left that I could also have used another packer, but that compression factor at that speed is unbeatable!

#64

Registered User

Join Date: Jun 2020

Location: London

Posts: 4


Hi guys, I did a lot of work on fast decompression for Z80 and I think some of our tech could be useful for you as well. ZX0 is an amazing format with a couple of very modern tricks, so it can compete (almost!) with zip-style compressors, even though it does not come with an entropy coder. The compressor is terribly slow, but if you can afford to lose maybe 0.3-0.5% compression (at most), there is a new packer written by Emmanuel Marty that is orders of magnitude faster: https://github.com/emmanuel-marty/salvador

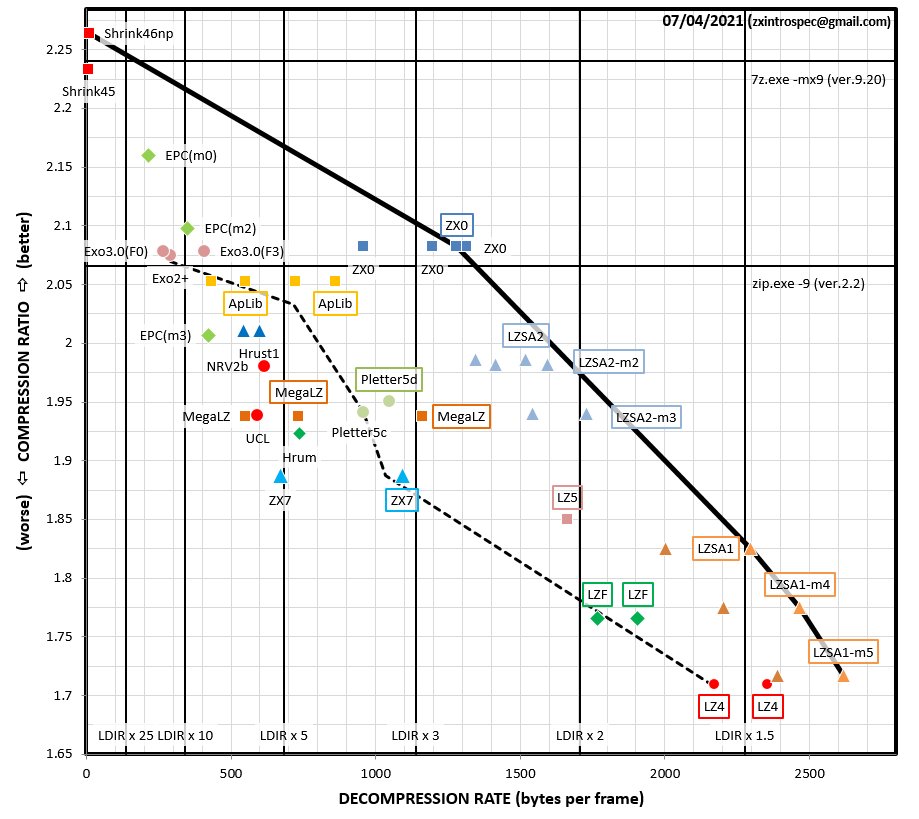

On some files it beats the original compressor too.

Since you mentioned LZ4 several times in this thread, I think that you may also want to have a look at the LZSA compressor, also written by Emmanuel Marty: https://github.com/emmanuel-marty/lzsa

It comes with 2 compression formats: LZSA1 and LZSA2. LZSA1 was designed intentionally to compete with LZ4, and it does beat LZ4 both in compression ratio and in decompression speed (basically, it requires less work in the main loop, so a correctly designed decompressor should always beat LZ4). LZSA2 is somewhere in between LZSA1 and ZX0: it compresses approximately at the level of NRV2B, but can be decompressed much faster, due to the active use of bytes and nibbles in the compressed stream.

Last but not least, I created the compression ratio/decompression speed diagrams listed earlier in this thread. It is important to understand that they were generated for (mostly) my decompressors and for Z80. I have no way of testing 68k decompressors, especially in terms of decompression speed, so your mileage may vary depending on how well optimized your decompressors are. I have examples of Z80 decompressors that I could make twice as fast as they were originally, so please be careful when comparing the compressors with each other. Just any decompressor will not automatically get you onto the Pareto frontier.
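To make the Pareto frontier idea concrete, here is a minimal Python sketch. The function name `pareto_frontier` and the sample numbers are invented for illustration only; they are not measurements of any real packer. A packer is on the frontier if no other packer is at least as good on both axes (compressed size ratio and decompression time, lower is better for both) and strictly better on one.

```python
def pareto_frontier(points):
    """Return the sorted names of entries not dominated by any other entry.

    points maps a name to (compressed_ratio, decompress_time);
    lower is better on both axes.
    """
    frontier = []
    for name, (ratio, time) in points.items():
        dominated = any(
            (r <= ratio and t <= time) and (r < ratio or t < time)
            for other, (r, t) in points.items()
            if other != name
        )
        if not dominated:
            frontier.append(name)
    return sorted(frontier)


# Hypothetical numbers, for illustration only.
samples = {
    "A": (0.50, 10.0),
    "B": (0.45, 20.0),
    "C": (0.55, 15.0),  # worse than A on both axes, so dominated
    "D": (0.40, 40.0),
}

print(pareto_frontier(samples))
```

With real data, the time axis has to come from your own decompressor on your own target, which is exactly why a slow implementation of a good format can fall off the frontier.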

#65

Defendit numerus

Join Date: Mar 2017

Location: Crossing the Rubicon

Age: 53

Posts: 4,468


@introspec: thanks for the post (and welcome).

Your name rings a bell... I've probably seen you 'elsewhere' before. I'll check "salvador", but this sentence deserves a closer look: "The compressor can pack files of any size, however, due to the 31.5 KB window size, files larger than 128-256 KB will get a better ratio with apultra." With the original ZX0 source it is trivial to enlarge the compression window (so much so that in my command-line version it can go up to 2^23). Maybe the same thing can be done in this optimized version; it's a key point for the 68k if you want to achieve noticeable compression factors.

LZSA2 could also be interesting.

#66

Registered User

Join Date: Jun 2020

Location: London

Posts: 4


Quote:

Quote:

LZSA is designed to offer multiple choices along the Pareto frontier, so it does not make much sense to focus specifically on LZSA2 or LZSA1. In situations where only compression ratio matters, ZX0 would offer better compression. However, when decompression speed becomes a consideration, you typically want to compress as much as you can given a fixed decompression time. LZSA allows you to do something approximating this behaviour.

This is a more up-to-date version of the diagram from earlier in the thread:

As you can see, LZSA1 and LZSA2 offer multiple points along the Pareto frontier. This is achieved by providing an option to avoid compressing some of the costlier (in decompression speed) matches, which reduces the compression ratio while increasing decompression speed. So you can decompress significant amounts of data in realtime, and if the speed is a bit too slow for your specific data, you can lose some of the compression ratio to speed things up. Or conversely, you might recognize that you have plenty of spare time left and switch to a higher-compression variation of the algorithm.

Last edited by introspec; 27 November 2021 at 07:38.

#67

Registered User

Join Date: Jun 2020

Location: London

Posts: 4


Quote:

#68

Defendit numerus

Join Date: Mar 2017

Location: Crossing the Rubicon

Age: 53

Posts: 4,468


Quote:

Everything has been designed to be as fast as possible on the 68k architecture (and it is much faster than the original decoder).

The Elias bits are now inserted in two different configurations, returning positive or negative numbers depending on usage; as I use unrolled code it's not a problem at all, but you can no longer use subroutines to extract bits.

The single bit 'unrelated' to the offset (which is used for the length of the match and sits in the LSB position of the byte read) is moved into the main bitcode stream. In its place, but in the MSB position, an (Elias) bit is inserted that tells me whether I have a short (7-bit) offset or a long one, to be completed with the streamed Elias-gamma bits (to form a possible 2^23 offset, encoded as negative).

The exit is moved to the literals run for two reasons:
- it can potentially shorten the bitcode (and adds a free helper for in-place unpack)
- it's the only way to handle >32k literals that can overflow the bitcoding

It basically adds an escape code to copy up to 163835 bytes at a time (repeatable 'ad infinitum'); it requires exactly two bytes + 1 bit (to re-align to the bit stream).

If your encoding ends with a match (both rep and normal), then add the specific 31-bit code for the output without further copying (direct exit).
If your encoding ends with literals, you can freely use a copy of up to 32764 bytes.
If your encoding ends with >32764 literals, you can use the escape code (literals overflow) until you reach the end of the queue.
During normal code flow you can directly use up to 32769 bytes of literals.
This way there is also an escape code usable to facilitate in-place unpack.

Well, yes, it can be confusing without examples, but the 68k handles it very well thanks to some implicit properties. The source code is probably better for a correct understanding (both encoder and decoder).
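For readers who have not met Elias-gamma coding before, here is a minimal Python sketch of the textbook form: a run of zero bits giving the length, followed by the value in binary. This is only the general idea. It does not reproduce the bit layout of ZX0 or of the modified format described above, and the function names `gamma_encode` and `gamma_decode` are made up for this example.

```python
def gamma_encode(n):
    """Textbook Elias-gamma: (bit length - 1) zeros, then n in binary, MSB first."""
    if n < 1:
        raise ValueError("Elias-gamma encodes integers >= 1")
    binary = [int(b) for b in bin(n)[2:]]
    return [0] * (len(binary) - 1) + binary


def gamma_decode(bits, pos=0):
    """Decode one value starting at bits[pos]; return (value, next_pos)."""
    zeros = 0
    while bits[pos] == 0:  # count the leading zeros
        zeros += 1
        pos += 1
    value = 0
    for _ in range(zeros + 1):  # read zeros + 1 payload bits
        value = (value << 1) | bits[pos]
        pos += 1
    return value, pos


# Two values back to back in one bit stream.
stream = gamma_encode(5) + gamma_encode(300)
first, pos = gamma_decode(stream)
second, pos = gamma_decode(stream, pos)
print(first, second)
```

Small values cost few bits (1 is a single bit) and a value of k bits costs 2k - 1 bits, which is why short offsets and lengths are cheap in formats built on this kind of code.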

#69

Registered User

Join Date: Jun 2020

Location: London

Posts: 4


Quote:

#70

Registered User

Join Date: Apr 2019

Location: UK

Posts: 172


Hi. Sorry to resurrect this thread, but it seemed like the best place to ask.

Does anyone have a 68000 ZX0 decompressor that handles block sizes > 64K (word size)? I've been using Einar Saukas' zx0 for compression (on PC) and Emmanuel Marty's unzx0_68000 for decompression at runtime. However, I think I've just hit the large block issue while decompressing a large file containing very long blocks of zeros (repacking the Venus game). I'm currently trying to make the changes myself, but I'll probably make a mess, and I figured this might already be available somewhere.

Thanks for any pointers.
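As a rough illustration of the suspected failure mode (an assumption on my part, not something verified against the unzx0_68000 source): if a match or literal length is kept in a word-sized counter, anything above 65535 silently wraps. On the 68000, for example, DBcc loops only count in the low word of the register. The helper name below is hypothetical.

```python
def length_seen_by_counter(length, counter_bits):
    """Length as it survives in a counter that is counter_bits wide."""
    return length & ((1 << counter_bits) - 1)


# A hypothetical run of 70000 zero bytes encoded as a single match.
run = 70000
print(length_seen_by_counter(run, 16))  # wraps: only the low 16 bits remain
print(length_seen_by_counter(run, 32))  # survives intact
```

With a 16-bit counter the decoder would copy far fewer bytes than the encoder intended, and everything after that point in the output would be misaligned, which matches the symptom of a file with very long zero runs failing.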

#71

Registered User

Join Date: Apr 2019

Location: UK

Posts: 172


Quote:

The only issue is that the compressor does not report the 'delta' required for decompress-in-place. For my particular file, zx0.exe reported a delta of 2. This value caused the decompression routine to fail for the Salvador-compressed file, but it worked with a delta of 4. Not very scientific, but it works.

Last edited by hop; 04 January 2024 at 20:23. Reason: clarity
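For anyone unsure where the delta goes, here is a small Python sketch of the usual in-place layout, assuming the conventional arrangement: the output is written from the start of the buffer, and the packed data is loaded so that it ends 'delta' bytes past the end of the unpacked data. The function name and the file sizes are invented for illustration.

```python
def in_place_layout(unpacked_size, packed_size, delta):
    """Return (buffer_size, load_offset) for in-place decompression.

    Assumes output starts at offset 0 and the packed data ends
    'delta' bytes beyond the end of the unpacked data.
    """
    buffer_size = unpacked_size + delta
    load_offset = buffer_size - packed_size
    return buffer_size, load_offset


# Hypothetical sizes; only the delta values 2 and 4 come from the post above.
print(in_place_layout(100000, 40000, 2))
print(in_place_layout(100000, 40000, 4))
```

If the delta is too small, the write pointer catches up with the read pointer and overwrites packed data that has not been consumed yet, so erring slightly on the large side only costs a few bytes of buffer.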
